Using R for Big Data with Spark

HDRips | MP4/AVC, ~592 kb/s | 1280x720 | Duration: 02:20:02 | English: AAC, 128 kb/s (2 ch) | 731 MB

Genre: Development / Programming

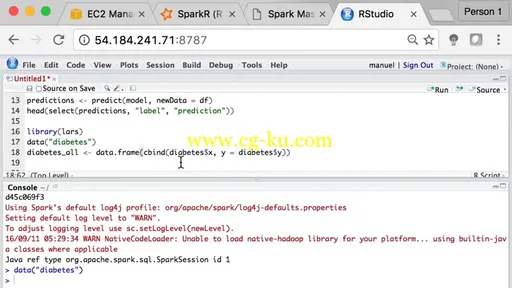

This course shows data analysts who are familiar with R how to apply their R skills in a big data environment, using Spark, distributed computing, and cloud storage.

You'll learn to create Spark clusters on the Amazon Web Services (AWS) platform. You'll also perform cluster-based data modeling using Gaussian generalized linear models, binomial generalized linear models, Naive Bayes, and K-means. The course covers loading data into Spark DataFrames from S3 and from other sources such as CSV files, JSON files, and HDFS. It also covers cluster-based data manipulation with tools like SparkR and SparkSQL. By the end of the course, you'll be able to work with data sets too large to process on a single computer. This hands-on class requires each learner to set up their own AWS account, which is very low-cost and easy to terminate.

Discover how to use your R skills in a big data distributed cloud computing cluster environment

Gain hands-on experience setting up Spark clusters on the Amazon Web Services (AWS) cloud platform

Understand how to control a cloud instance on AWS using SSH or PuTTY

Explore basic distributed modeling techniques like GLM, Naive Bayes, and K-means

Learn to do cloud-based data manipulation and processing using SparkR and SparkSQL

Understand how to access data in CSV and JSON formats and from HDFS and S3 storage

Release date: 2016-10-28